Introduction

2025 was my first full year out of college and my first full year living in San Francisco1, and so if you want to define it this way, my first real year as an adult. What is a year? Is it an object made up of a fixed number of days and months cobbled together, sometimes haphazardly, into seasons? Or is it a cycle, only knowable by tying yourself to being exactly where you were a year ago, in a world that feels, compared to that reference frame, the same?

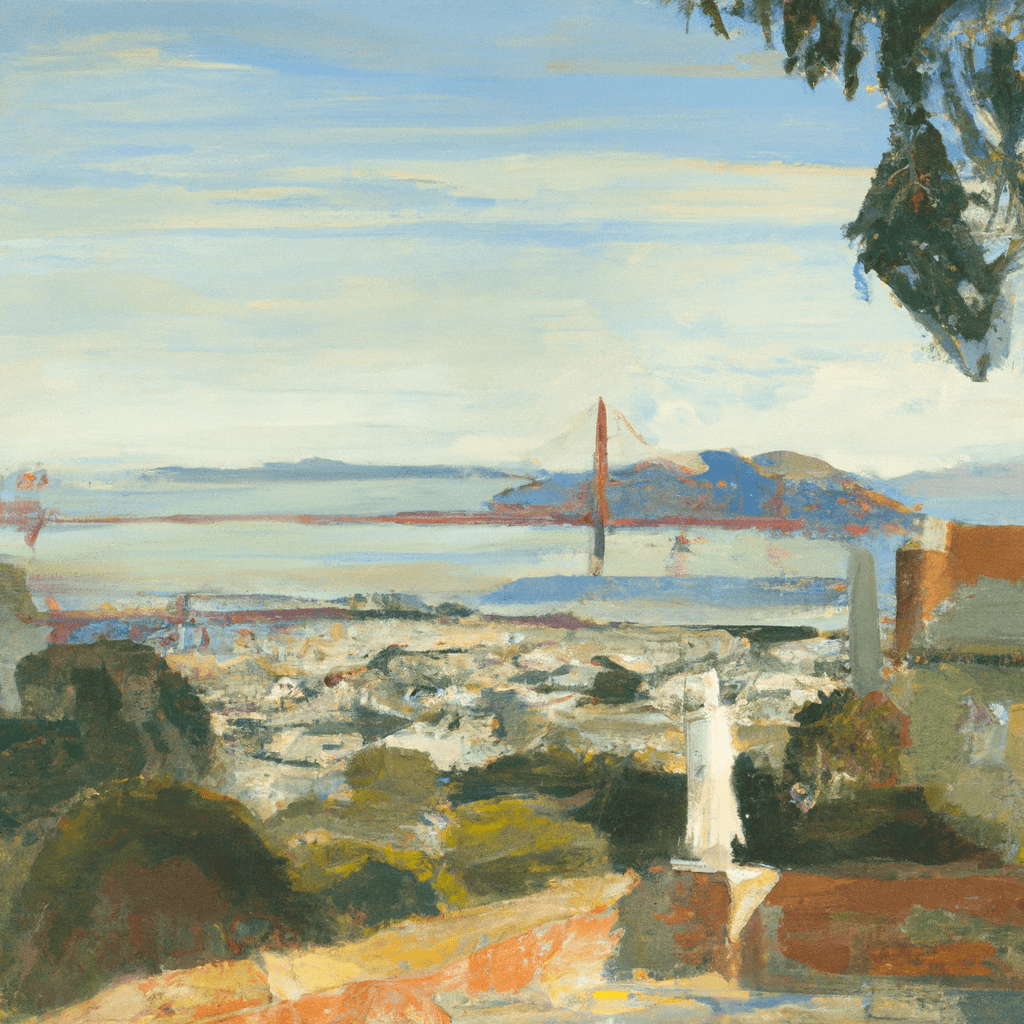

Life in SF lends itself to adherence to the latter definition; I’ve found it easy to drift away from my mooring to the calendar as the days blur into each other. Time doesn’t seem to pass the same way it did growing up in the Midwest, with seasons shifting underneath you slowly yet steadily, so that if you looked closely enough you could see nature tapping its foot to keep time. Even if you got distracted by the motion of life, one day you would look out the window expecting to see green leaves and instead see the packed snow drifts reflecting the light of the moon, and snap back to a sense of when, so much like the sensation of falling that unexpectedly rips you away from approaching sleep.

A full year is just enough time to spend half of it learning what you love in a city and the other half learning what you hate about it, and then to see things under their true light and recognize that much like you or I, it is imperfect, flawed but real – and that some of the happiness was caused by the cool breeze and that some of the detestment was caused by the angle and harshness of the sunlight.

The booths at the Fort Mason farmer’s market, which I go to most Sundays, permuted slightly after the new year. My favorite guy, from whom I bought a lovely pomegranate salsa when in season, and orange juices at other times, has disappeared. He might be back. He might be gone for good. Time continues to pass and life moves on. What you have left is a memory of what that salsa was like on a good chip.

Today is also my 24th birthday. I like that the grids are aligned – it means 2025 was the year of being 23 years old, and that the line I draw on these margins is from a thicker marker.

What’s happened?

Let’s start from the top.

Jan-March

I started the year as a founding ML engineer at the startup I moved to SF for, working on diffusion models for OOD data generation to train downstream CV models. I quit in March in solidarity with the firing of another founding employee (and mentor of mine at the company) – it was fun while it lasted!

Otherwise, my time was filled exploring the city and meeting new people and seeing new places and enjoying days of rain and sunshine, Friday night Waymos and Sunday morning farmer’s markets. I think these are the most beautiful months of the year, bright and breezy yet interspersed with some clouds and rain unlike much of the rest of the year, and importantly the UV is completely reasonable instead of eleven like it is over the summer. I managed to get sick for two thirds of March, which was made more tolerable by being able to watch the hummingbirds in my back yard flit between the newly blossoming trees.

April-June

I spent the spring doing a combination of sitting outside and reading and what can best be described as waltzing around the city with my newfound free time. These months also contained a few trips: First, a few quintessentially spring days in April back in St. Louis for our one year reunion, the soft rains falling punctuated by time with friends also in town and former students of mine. This was lovely.

At the end of the month and into May, I might have been the first person to ever fly the SFO -> JFK -> DXB -> DAC -> DXB -> MKE2 route. This presumably introduces a few questions, to which I say: long story, we’ll get to it, but the short version is a 10 day work trip to Bangladesh, and then seeing my friend Dominic as well as a few other buddies in Milwaukee.

The day before I left I got to see Hiromi Uehara at SFJAZZ3, a jazz pianist I’ve been listening to for a decade, in concert at what seems (to me) to be the peak of her career. I’d been looking forward to this since I bought the tickets in December.

July-Sept

At the end of June, I decided I needed some focus (and a salary) and joined a company called Therap as Director of AI, briefly part-time before going full-time. I have a few points I want to make relating to this, so it merits a bit of elaboration.

Sidebar I: Therap, Bangladesh, and AI in the Enterprise

Therap is the leading EHR platform for care providers to the intellectual and developmental disability (I/DD) space in the US, and after 20+ years of operation, its users range from large providers to individual group homes to the states themselves. The platform is centered around documentation. Individuals receiving care see a piecemeal staff that perform various duties, often billed through Medicaid to the state or federal government, and so both for operational and quality assurance reasons, there is an immense amount of data, structured (vital signs, medication administration, rubric-based care plans) and unstructured (session notes, event reports, logs for other staff).

I got to know the folks at Therap in the summer of 2024 when I was floating around post-graduation, and ended up spending a month or so on the east coast. At that time, the company leadership was starting to get serious about AI and felt that being the stewards of ~petabytes of data representing on the order of half a million individuals came with significant responsibility: to continue to keep this secure, but also to use the data to make it easier to use their platform and product, as well as improve the care being delivered in the present and future. I ended up architecting and prototyping two initial products with them, one of which became their flagship AI launch at their national conference that year, and kept in touch.

When they caught wind that I’d quit my job, they invited me to join a biannual C-suite trip to Bangladesh at the end of April. Therap has around two hundred US-based employees, but over double that in BD, including the bulk of the programming staff. I didn’t think I’d ever have another opportunity to go to Bangladesh, and I figured it would be a way to experience somewhere so different from the US and to have a good story to tell. Going with people who have done this dozens of times and know scores of locals in Dhaka was not only the sole way I’d be able to make this trip, but a genuinely interesting one. It would also be a serious work trip, and I wanted to continue the AI strategy work I’d been doing.

So, I said yes, why not, and I got on the plane to fly to NYC to join the rest of the team on their pathway to BD. It would have been faster for me to route via Hong Kong, but again part of the draw was the assurance of being able to touch down in Dhaka (inevitably jet-lagged and crazy tired, since I can’t sleep on planes) and have people to guide me. The first leg to Dubai was rather pleasant – it turns out that long business class flights (that you aren’t attempting to sleep on) are actually a great place to spam caffeinated beverages and get work done. After a brief layover during which I started to crash despite taking laps around the terminal, we got on a second smaller jet and got a commemorative photo from the flight attendants (which wasn’t supposed to happen, but they did it for a family flying with a small child and then we asked if we could get one too, mostly joking, but they said yes).

At this point I gave up on trying to get work done and watched Indiana Jones4, whom I was feeling rather emotionally connected to. A few hours later we started descending into Dhaka. I’d spent some time over the prior weeks reading up on Dhaka and Bangladesh more broadly, a mix of political and demographic info and linguistic facts about Bangla, and even though I was aware that Dhaka was incredibly populous (by the new UN standards, it is the second largest city in the world at 37 million people) and more importantly, dense, it didn’t click until this descent. You get a first glimpse of the urban environment and packed buildings, but more critically you see how many people are just around – there were maybe around a hundred or so people seemingly just hanging out on or near the runway as we landed.

I took my first steps on the subcontinent – and Asia at large5 – as I got off the plane. I’ll circle back to the experiential part of Bangladesh later, but it is worth noting again how dense and viscerally developing and growing the country is. The roads are filled with an impossible number of buses and cars and rickshaws and CNGs6, construction is happening left and right, and I was given the impression that the city looked nothing like it did even a decade ago. On the professional side, we spent the next ten or so days in fourteen hour days of meetings. My focus was on defining AI strategy for the company in the coming year, meeting everyone involved with ML at the company, and re-organizing those engineers and teams.

So, a few months later, why did I decide to sign on full time at what some in SF would euphemistically call a “legacy company,” and not some early stage startup or lab? Common wisdom in the Valley is that these companies will wither, led into perdition by sclerotic organizations, to be replaced by new institutions like a snake shedding its skin. I’d contend that this isn’t an inevitability, nor should it be. In some cases, it makes sense – maybe NetSuite or Salesforce deserve to die or at least lose their glory, like IBM and Xerox before them. These are cases where a faltering leadership team missed technological transitions, and where the direct harm was relatively inconsequential.

On the other hand, some businesses both can and should evolve by themselves. In the case of Therap, I was convinced that the company leadership got it, which with respect to AI is rare, evidenced by the number of organizations getting robbed blind by Oracle salespeople or other groups peddling AI solutions at absurd markups. Healthcare businesses also have tangible externalities when they don’t stay up to date. A whole suite of people place their trust in these tools, from care providers to states to the individuals on the other side of all these structures, who deserve the best care possible.

At the end of the day, I felt that I was going to be given the autonomy and trust to drive change, the nascent opportunity for impact, and novel challenging problems to work on – both on the technical side as I’d direct products and the research agenda, and on the business side directing infrastructure buildout and organizational structure. Data security and tenancy are a top priority for the company, which runs the vast majority of the platform on-prem in east coast datacenters, so infrastructure development would mean leading a multimillion-dollar GPU buyout and conducting the related capacity planning.

AI is a two-fold opportunity at a company like this, first as a way to consume data and simplify the product and all the standard ways we’re seeing it deployed, but second as an internal accelerant for processes, be it in software development or marketing or so on. Organizations that are able to correctly parse what is worthwhile and what is marketing junk, and then actually roll this out are going to be many steps ahead. Those that don’t will incinerate money to be eaten up by incumbents.

We’ve all seen the reports of AI pilots failing, or of organizations where ~90% of staff report using AI but only 6% do so through the official Microsoft Copilot deployment. I’d argue that this is due to bringing on the wrong people to lead these initiatives. I have no faith in the ability of standard consulting firms to understand the underlying technology; they simply do not have the staff who are up to date enough here7, evidenced by the hilarious reports that suggest using comically out-of-date models or that were sniped by the RAG-consulting industrial complex. Senior leadership in most places is equally unprepared. Again, if some big B2B SaaS company wants to dash itself against these rocks, hiring consultants that can’t explain whether encode or decode is bandwidth or compute bound, go for it. I don’t care8. But when it comes to companies that people rely on for their healthcare, I’m concerned when I see them make decisions like this.

Anyways, more on this later. Back to your regular scheduled programming.

July-Sept, cont.

In August I went home to Minnesota for the first time since the Christmas prior, and got to spend some time biking around9 and participate in the time-honored tradition of the Minnesota State Fair10.

In September I went to Seattle for a few days to go to my friend Carson’s wedding, and extended the trip a bit to see a few friends, one of whom I hadn’t seen since my internship at Microsoft. This was a nice vacation along a few axes. The wedding itself was quite lovely, a small group at a charming outdoor venue, and it was unexpectedly moving to see someone I’ve been friends with for so many years get married. Beau (the other friend I went to the wedding with) and I both ended up crying in our seats. We’ve built for ourselves a modern world where it is approaching the default to be detached – be it as plain as ignoring passersby with your AirPods in, or the interiority-maxxed zoomer avoidant anxiety mantra of dodging all confrontation, closure, etc – so being witness to something so insurmountably real makes it seem toweringly monumental.

I also was reminded how much I enjoy Seattle. I hadn’t been there in the three years since the internship, and I’d forgotten how much more in nature the city feels than SF, despite all the talk here about hiking, etc11. Trees more pervasively fill the streets and pine-covered hilltops backdrop your views, Lake Washington is more accessible recreationally to kayak or paddleboard, not just on some VC’s yacht, and it’s easier to escape out into the mountains. I have such nice memories of hiking through pine-scented forest cover dripping with moss, finally breaking through the treeline to arrive at a sparkling glacial lake, or lazily paddling a kayak or paddleboard over the sweet music of the calm water lapping against the side, or sitting on a blanket on a white sand beach dotted with firepits watching the sun slowly set over the Sound and then disappear behind the mountains to the west.

Beau and I ended up spending some time tourist-ing – Pike Place, a walk around Discovery Park, some hikes out of the city, and walking around Queen Anne in a light drizzle with a pumpkin muffin and a chai, feeling very content with autumn. Downtown felt ridiculously more alive than it did in the summer of 2022; I was pretty astounded by this. SLU is matcha labubu consumerist slop land and I wouldn’t want to live there, but the area around there, by Pike Place and the Spheres, was so much cleaner and bustling with shopgoers and residents and tourists.

Oct-Dec

These last few months have been spent focusing on work and routine, again punctuated by some travel.

First, back to Milwaukee in October, resulting in a number of trips to Kopps12 with Dominic as usual, and then home the following week to avoid the Dreamforce crowd in SF. In November, I flew back to Minneapolis to drive with Dominic and another friend, Jack, to Houghton in the UP of Michigan to see Dominic’s brother Seamus before he graduated, and to go to the combination trail ultra marathon and 150 lb hog roast event that weekend. It was freezing and it was a blast.

Most recently I’ve been away from SF for about a month between heading home for Thanksgiving, then two weeks (including travel) for another work trip to Bangladesh, then home again for Christmas, and now back to SF. I’ve enjoyed the snow (in Minnesota on the bookends, of course, not in BD).

Sidebar II: Politics and Development in Bangladesh

Some more about Bangladesh: The first trip was marked by extreme humidity, which makes me hilariously cranky. Those of you that know me have probably heard me say something along the lines of “heat makes me evil” or just seen it put me in a bad mood. I apologize. I’m built for the snow, okay? This second trip was much cooler, but the second we got off the plane and stepped into the airport, we entered this extreme smog that made me feel like I was smoking a pack an hour. I got sick within a few days, which honestly was probably from something I caught at home in Minnesota, but I don’t think the air quality helped (I think for the first few days Dhaka had the worst AQI in the world?).

Bangladesh sits at a moment of political uncertainty. In 2024, a protest led primarily by zoomer Bangladeshis led to the July Revolution, ousting the previous PM Sheikh Hasina and her political party, the Awami League, replaced by Nobel laureate Muhammad Yunus. The Wikipedia page does a much better job at explaining the details here than I would, but the change left the country on a fundamentally different footing. The upcoming election13 will be the first time around 40% of the country gets to vote. This is, as a western political observer, totally shocking. The existing political system alternated between dominance by the Awami League and the BNP. The first one of those parties has now been excommunicated. This isn’t to say there isn’t remaining political machinery, or old loyalists, but they’ve either earnestly capitulated in full to a switch, or are expediently distancing themselves, or have decided to name themselves “independents,” which much like in the US is just a covert way of saying you’re still on the same team but you don’t want the stigma of the label.

On the other hand, the BNP was met with extreme enthusiasm, particularly by the youth, but I’ve gotten the sense that they’ve failed to live up to some of the key promises – particularly around reduction in corruption, increased youth employment and economic opportunity, and change to the political system executed in a meaningful way. It doesn’t help that Khaleda Zia, the former PM and the party’s meaningful figurehead, passed away around two weeks ago as of writing. Her son might take up her mantle, but he’s currently living in exile in London and faces criminal convictions back in BD.

Corruption seems to be a particularly salient point, and hearing people talk about it reminded me of how some of my friends here have talked about politics in India. I’m sure BD and India don’t love being compared to each other in this sweeping way, but I mean this in terms of the sadness I hear from the countrymen of both nations; their belief in their people and a hope for a future that they are seeing dashed against government officials and businessmen who are more interested in enriching themselves than furthering the nation.

Given the discontentment with the system as we know it today, I would hazard that there is still enough pent up energy from the youth movements that we haven’t seen the end of political change in BD. The more politically attuned people I discussed this with echoed this sentiment. We have no accurate polling for the election (!) and the establishment is writing off some of the directly youth-focused parties – I heard them referred to with a head shake and mild scorn more than once – but I don’t see much reason to believe that the youth bloc, who again make up forty percent of the vote, would be too excited about the status quo. There are two other main political parties. The first is the NCP, who are the de facto youth party discussed above. The second is Jamaat, a more fundamentalist Islamist party, who are also gaining some steam.

What does the future of Bangladesh look like? What does a fitting leader look like? Does the country want to take inspiration from the development of China or the development of India, or both? I don’t have the answers. I heard arguments for a democracy, and I heard arguments for a strongman. Regardless, there’s a real sensation of becoming, that the country is on the verge or precipice of change and evolution that feels so foreign to the encumbrances of American cities. As I said before, Bangladesh is the most viscerally developing place I’ve ever experienced. You know this on paper reading the Wikipedia pages, but you can only fully understand this sitting in traffic, horns blaring on all sides of you, watching the sidewalks packed with people undulate like one living breathing mound, buses packed with who knows how many people streaming by, seemingly always inches away from a collision but never actually in one; construction teams and concrete mixers working away pretty much wherever you go; storefronts lit up at night, happy-looking friends and families and couples representative of the growing middle class drifting between them; towers interspersed with greenery, where the built up parts of the city feel as if they were still the jungle, partially because of the lush flora but also because the tightly packed buildings form a canopy of their own.

As you drive a bit further out of the city, you travel into what feels like a real-life Minecraft superflat map. These are the areas of new development that are taking over old farmland or swampland that was plowed into flat grasses. Some of it has been plotted out for grids much like the ones you’ve just left, that were built only so recently, and it’s easy to see them in your mind’s eye copied across the terrain here. What’s already there is a bizarre mix of ornate mansions across from go-kart courses (this is real by the way, hilariously), farm stands next to massive but mostly empty highway projects; some land that belongs to universities, hospitals, the military; a scattering of impressive modern mosques that sit on the field and remind me of the fantastic rocky outcroppings in the western Dakotas that unexplainably tower over the plains.

Bangladesh is excited about the future, and wants to be part of the future, more so than many places in the US. Cities here – and really I mean the perfidious boomers – want to latch themselves onto the idea of an eternal 90s, the golden hours of their lives and of America, to halt change and building and progress, to plug their ears like a child and yell to not hear the voices of the adults talking, telling them that the world is moving on without them. It’s popular to see China as the engineers who can actually build the future, and while they certainly have more state capacity and advanced capabilities than Bangladesh, I’d like to point out that they aren’t the only place in the world that is successfully building housing and infrastructure while we aren’t! In fact, I think the Dhaka model is a pretty bad one; unorganized if nothing else, but unlike San Francisco they’ve managed to build more than 5 units of housing, and probably 100,000 times that!

I got to visit and speak at three of the country’s top universities over the course of both trips – DU, BUET, and IUT14, where I met with students and young professors and CS department heads to talk about AI. Bangladesh made a very intelligent bet on the early computer age, and prioritized training its intelligent youth to become the type of Java programmers Therap employs. But, just as the enterprise Java programmer in the US looks like a deflating at best or automating at worst career choice, the country will have to adapt.

After taking a few days of lying in bed sick, I went back to the office. It was this wonderfully clear day. The fever had broken, the jetlag had ended, and the interminable smog had dissipated. Instead of a swampy murderous humid heat, it was an eighty degree day with a light breeze and blue sky and the sun shining so softly, and when I had some time between meetings, to escape from the conference room packed with so much to do, I went to sit on a tiny deck on the 6th floor, not in a chair but on the tile floor, and look out over the other rooftops and their flowers. The office is in the garden district of Dhaka, Banani, and though offices have taken over most of the quarter nowadays, it has maintained the charm and every building has blooms of different colors covering it. I let it all wash over me. It felt like childhood. In all that chaos, the rickshaws and honking horns and what felt like one billion people bustling beneath me, I was so close to an early summer day on the lake, maybe prancing through a flower garden that seems like a maze to a toddler, sunflowers towering above my head, or sitting in a pagoda looking out at the soft blue waves, or in a field of grass.

Multimedia

I read a good amount (books, papers, essays, etc etc) this year, which you can find segmented here. I particularly enjoyed Bolaño’s 2666, Mutis’ Adventures and Misadventures of Maqroll15, and Vogel’s biography of Deng Xiaoping.

I run a scrape monthly to make a Spotify playlist of the 50 songs I’ve listened to the most during that month, which you can find for the year (and previous years16) on my Spotify profile, but here are three that I feel are 1) good playlists on their own merits, and 2) particularly salient time capsules.

A quick rundown: It’s been another year of Jungle Jack and Benjamin Epps excellence. There was a great Caballero and JeanJass-led collaboration album. I might be the only guy in SF walking around listening to IAM and Shurik’n and Akhenaton (who’s had a great run of tracks and features in the 2020s for whatever reason). As mentioned, Hiromi’s OUT THERE was wonderful. I realized that Birdman has a classic in Fast Money – it’s genuinely really good. If Jackson Browne’s Late for the Sky was my album of the “year”17 last year, growing up is understanding For Everyman might be better. Back to back to back Westside Gunn albums including a quintessential Stove God Cooks feature. Hit-Boy and The Alchemist did a full rap album, following up strong from The Alchemist’s album last fall. I also enjoyed some Ray Barretto and Larry June. Spotify Wrapped has informed me that I listened to the Drake album a lot more than I thought I did, with the density almost entirely located in the spring. In my defense, reaching the point in your career where you can sample yourself is pretty neat.18

I watched The Wire from July until October. All five seasons. If you know me, you know that I don’t watch TV – I just can’t do it, it drives me nuts – so take that for what you will.

Thoughts

On Screens

One day during the fall of my sophomore year of college I decided that I was “post-phone.” Some friends may remember this, hopefully by its charm, but more likely in annoyance – there was a certain amount of consternation around the idea of picking a time and a place for lunch and just finding each other without texting. What if someone was late or plans changed or one of us was struck and killed by a campus shuttle, and would the other person just be waiting forever, never to order their chicken wrap? And yet we managed, like the infinitude of our ancestors before us, to make this work. In truth, this was made feasible on the whole by having texts on my laptop so I wasn’t completely cut off from the world, and on the music front, I’d received my dad’s Walkman and collection of tapes. Of course, in a few months I’d ended this experiment in its totalitarian form – I really wanted to go back to using Spotify and felt like I could honestly responsibly handle having a phone.

A few college friends of mine shared the same birthday weekend at the start of the fall, and junior year marked most of us moving off campus, so on a Friday night early in September of 2022 they threw a party. I got there early to help set up. After shuffling around solo cups and chairs and so on, I watched a friend set up the world's tiniest air purifier, with a sense of satisfaction proclaiming that it would help keep the air germ free for that evening. I remember actively rolling my eyes at this. We then packed like 35 people into the apartment and most of us got COVID.

Later that week, I’m in bed with a 104 fever, losing my mind. For the most part my thought process is operating like that Funkmaster Flex Otis premiere, but I’m eventually consumed by the idea that the screen is ruining my life. Interacting with it in any way – to check a text or email a professor that I’ll be out of class – generates a visceral boiling anger. I eventually reach my breaking point and spend a few turbid hours sketching out in a notebook how I could use Whisper and GPT-3.5 to intercede with the screen on my behalf, maybe to send or read out loud texts, or email, or get the weather for the day? Of course this goes nowhere, I’d have to spend weeks on the screen to make this system and at the end of the day, getting the current generation of AI to interact with your web browser or computer seems daunting to a point that I just scuttle the idea. The fever breaks after a few days, and though I think it gave me a semi-permanent IQ drop (I should have taken Advil… I decided to fight through it for the first few days which was idiotic), my sanity generally returns and I am resigned to doing all the homework I’ve missed, aka spending copious amounts of time on the laptop.

I still think there is something innate to the screen that is wrong. Not just that social media is driving us crazy with ultra-intense mimesis or that shortform video is frying your attention span or even that a Slack ping is pulling you away from a task, although these are all true. No, I think there is something inherently wrong with the screen. It might be the blue light. It might be the high refresh rate. It might be LEDs. I don’t know, I’m not citing any science here19, but someone else must feel this, right? That looking at a screen makes you actively dumber, even locked down to a blank Word document with no distractions, that after about an hour or so of not being on one your brain finally breaks free of the rust and actually starts moving again.

I would be completely unsurprised if we find out there is some sort of actual health concern or brain wave inhibitor or something going on here. Test this out yourself. I find it the most observable when I’m taking a walk, and I’ve ignored the screen entirely for about half an hour, and then I reach a point where I need to text someone something, or look something up, even for a few seconds before putting it away, and then my brain patterns feel different, my floaters get kicked up, the serenity is lost.

After all, growing up there was predominant wisdom that you should probably not spend more than an hour or two a day on screens, which to a certain extent originally only meant the TV, because spending more than that on the computer was relatively absurd. This wasn't just for children; the general advice was pitched at adults too -- and of course generally not followed, since TV consumption has been heavy ever since its invention. Since then, these doctors have been disappeared off somewhere as society said “Nahhh I’m gonna spend 9+ hrs a day on a screen.” This is mildly troubling. To the best of my knowledge, this advice wasn't ever wrong. There had already been studies and so on.

SWEs and researchers and the greater laptop-job class are stuck in a bit of a temporary bind here. After all, their job is to live inside the computer. There’s this framing I toss around with friends – if you have a laptop job, especially one where most of your meetings are on a call, you spend ~8 hours a day (less breaks and lunch, unless you’re a consultant or something and you’re taking your lunch while looking at the computer anyway) on the screen, then you go home, and of your 4HL remaining time, the average American spends like 2-3 of those hours on Netflix or TikTok or whatever. At the end of the day, the supermajority of your waking hours are spent on some screen! For all we’ve made fun of the “metaverse” and Zuck’s idiot pivot, most of America’s upper-middle well-educated class lives primarily online and secondarily in real life – not just NEET gamers or whatever people associate with this level of plugging-in.

To go a step further, it doesn't really feel that coincidental to me that after about a decade of proliferation of these devices, we're seeing our youngest generation losing literacy, the next developing more mental illnesses than ever before; our oldest, and those who run the country and its companies, as executives, seemingly go insane. Nationwide we're more dissatisfied with the political climate and the abilities of our leaders and their staff. Politics seems to be genuinely crazier. This coincided with COVID, where people spent the absolute maximum amount of time possible glued to technology, and has dissipated in part since. Maybe this is due to the growth of alternative views, call it competence based or the Abundance movement or Zohranism or whatever, but maybe it’s due to our leaders spending less time in the mental dunk tank.

So what is there to do about this? I print out most of the online reading I do, be it Arxiv papers or Substack posts, and I have multiple paper organizers on my desk20. I have f.lux on my computer and a triple click on my phone that makes it so orange it looks like it’s the surface of Mars and a Daylight Computer that I actually don’t use very often because I print material out. I really want to get one of the multi-thousand dollar E-ink monitors but I can’t quite justify that kind of purchase yet.

I expect we are going to see a broader rejection of the screen. For the most part this won’t be due to my brain-inhibitor crankery (although I think people feel this without noticing it, or will at least actively feel the wave of homeostasis you get when you get off of it); it’ll most likely be because the screen is where the slop lives. Slop is AI slop, dominating visual media, but also good old human-made slop: reels and classic social media feeds and ads and clickbait competing for your attention. The screen is home to all of these disreputable characters, and once we get over our annoyance with their synthetic cousins we’ll realize we really aren’t that thrilled about heading down the dark alley to meet these guys either.

I for one think that AI is actually helpful here, not just in acceleration of awareness, but as a screen off-ramp. If you watch a movie or TV show set in a high-powered office between the 50s and the 90s, there’s always some scene where the manager power walks to their corner office, is briefed by their secretary, is handed a sleek folio or a stack of papers, and issues a couple of instructions in return. This is, of course, all enabled by some floor somewhere full of writers and researchers and other lesser secretaries21. The proliferation of the super secretary is what I see AI enabling. Critically, this differs from the current set of AI secretaries, which all seem to target the smallest version of this problem and have the UX of modern offices, not an ambitious jump back to the pre-screen world.

I recently bought a flash-drive sized voice recorder and had Claude put together a Mac app to take everything on the drive when I plug it in, transcribe it, intelligently tag the content, and populate notes. I’ve found myself wanting to take a note or set a todo while walking around or save a quote from something I’m reading, but not wanting to look at my phone for even the few seconds it would take to open voice memos, and this solves that issue. I’d put out a general request-for-company here – I think all the 24/7 recording devices are worthless, you really want something purposeful, like tape recorders back in the day (I’m saying this like I’ve ever seen one outside of media…). The best form factor seems like a ring with a small button or volume sensing that you raise to your lips and whisper into. This would extend past a notes app to sending texts, kicking off agents, whatever. It should not be a watch, I do not want a screen. It should not be an earbud, audio is super inefficient as a way to ingest information, I’m happy to walk back to my laptop later and get a large report to print out or like I have set up right now, structured cards generated from what I dictated.
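For the curious, the plug-in-and-ingest flow described above could look roughly like the following. This is a minimal sketch under stated assumptions, not the actual app: the names `find_recordings`, `tag_text`, and `ingest` are hypothetical, the audio file extensions are a guess at what a recorder might produce, the transcription step is left as a function you pass in (whatever speech-to-text tool you prefer), and the "intelligent" tagging is replaced by a crude keyword check purely for illustration.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable

# Assumed recorder file formats; adjust to whatever the device writes.
AUDIO_SUFFIXES = {".wav", ".mp3", ".m4a"}


@dataclass
class Note:
    source: str      # name of the audio file the note came from
    text: str        # transcript
    tags: list[str]  # labels such as "todo" or "quote"


def find_recordings(drive_root: Path) -> list[Path]:
    """Return every audio file under the mounted drive, in sorted order."""
    return sorted(
        p for p in drive_root.rglob("*")
        if p.is_file() and p.suffix.lower() in AUDIO_SUFFIXES
    )


def tag_text(text: str) -> list[str]:
    """Keyword stand-in for the tagging step (a real setup might ask a model)."""
    lowered = text.lower()
    tags = []
    if "todo" in lowered or "remind me" in lowered:
        tags.append("todo")
    if "quote" in lowered:
        tags.append("quote")
    return tags or ["note"]


def ingest(drive_root: Path, notes_dir: Path,
           transcribe: Callable[[Path], str]) -> list[Note]:
    """Transcribe new recordings and write one small text note per file."""
    notes_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for audio in find_recordings(drive_root):
        out = notes_dir / f"{audio.stem}.md"
        if out.exists():
            continue  # already handled on an earlier plug-in
        text = transcribe(audio).strip()
        note = Note(source=audio.name, text=text, tags=tag_text(text))
        out.write_text(
            f"tags: {', '.join(note.tags)}\nsource: {note.source}\n\n{note.text}\n",
            encoding="utf-8",
        )
        created.append(note)
    return created
```

Keeping the transcriber as a passed-in function means the same loop works whether the transcript comes from a local model or a hosted one, and skipping files that already have a note makes it safe to re-run every time the drive is plugged in.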

I’m astonished by people that have a screentime above anything trivial, especially people of my age or older, and of my milieu22 (i.e. received a good education, and are achieving at work, continued education, or are ostensibly well prepared to be doing so). What are you doing with that time? Reels? TV? Games? Why? What the hell is your problem? Why have you chosen to muddle through life like this? Even worse is if you can’t say what you’re doing, but you’re burning hours of your day – your so precious and limited life – doing it. I’m not asking you to be productive, by any means; stare at the wall, I don’t care. But can you really look yourself in the eye if you spend any more than passing minutes on this crap? Moreover, those passing minutes are insidious if you believe there is something inherently harmful to time on the screen, whether you limit that to short form content or social media (the reasonable belief) or, like me, you think that this holds in general, that any time on screens is harmful, no exceptions. Some time is expedient – staying in touch with friends, work, and so on – but it is a constant Faustian bargain.23

I invite you to reflect on how you are using this time. If it’s on short form: You have to go scorched earth, you should have zero minutes and anything otherwise is unjustifiable. I hear counterarguments such as that it’s how people get their “news” or are “learning” or something. Do not trick yourself. Even if you do get some positive output, is this how you use 100% of your time on Tiktok? Really? Don’t say that you need to scroll for a while to find the good stuff, that’s just gambling! If it’s spent on other social media, I concede some could be inherently social. But again, is this how you’re actually using it? Would your life really change without it? Another argument is re: destressing. Do you want cocomelon? Are you a child?? I argue that you can destress without plugging yourself into the Wall-E slop dimension, or frying your brain on the USB stick.

In the words of a wise man: “If the music does not improve the quality of your thinking / Then it’s not music, man / It’s just a theft of your time, a sabotage of your spirit.”24

The interior zoomer has to face this head on. The screen is evil. The first step for our generation to save themselves is abandoning the screen. The second is to be able to go for a walk outside with no stimuli. The third is actually rebuilding the world around us, both forming community and deciding that we can actually cause change – but we have to be able to get there.

On AI Coding

Much has been written about Claude Code, etc, as of late and I don’t see much need to rehash it. However, I do have a few anecdotes that illustrate what I feel are underdiscussed facets of the trajectory we are on. A tad bit of context: I’ve been using AI code assist for almost 5 years at this point, starting with early access to Github Copilot. I’ve been paying the $200/month Claude Code Max Plan since it was released in early May of this year. I don’t particularly like coding! I think this is where I differ from most of my peers I discuss this with – I’d be happy if I never needed to grind through implementation again, I’m in fact supremely relieved that I’m being liberated from doing this kind of thing.

I decided I wanted to do a CS / math double major my senior year of high school as a way to do an AI degree in-the-aggregate. I was part of the in-between group of AI waves – after the AlphaGo hype, but before LLMs – and I decided that I wanted to go into the space after reading work in poker by a then little-known PhD student by the name of Noam Brown. I was interested in human strategic decision making, particularly in settings with bluffing and prediction, and naively thought it was pretty straightforward that computers could beat us in chess or Go by playing millions of games against themselves. That an AI could beat a suite of poker professionals, though, surprised me in a way that has since become a feeling that many AI researchers have come to know and internalize: to watch AI blow humans out of the water at something we found intrinsically human. So, won over by the idea that we would be able to develop an AI to be a superhuman strategic predictor and then understand how that AI was making its decisions long before we’d actually understand how the human brain did these things, and abetted by my math background, I decided that I was interested in this AI thing.

My high school had a research class that my tracked cohort25 ended up in our senior year, where we were given freedom to work on pretty much whatever we wanted in the sciences and access to a full-service lab. Instead of getting a cool lab coat and using the CRISPR machine or something equally as real, I opted to sit on my computer. I ended up working on RL for incomplete info games and attending a regional science fair (only because it was required for the class). One thing led to another and I was named an ISEF finalist, albeit for the magical year where nothing actually happened due to COVID. Alas, no trip to the Anaheim convention center.

And thus I’ve come to affiliate myself with the math side over the software side, which I see as a means to an end. Of course, the engineering and systems level work is important, especially given how empirical deep learning has been over the last decade. Regardless, I was quick to jump on tools that would let me write less code, and I didn’t have the surrounding purpose-anxiety that a lot of SWEs have, no attachment to the craft or feeling of control by being an expert in the nuances of BeanFactoryBean<Bean<SelfBean>> or the right terminal one-liner to grab what I need to from the DB or logs to be an effective on-call fixer, or the calm of cleaning up the codebase dutifully after the high-powered initiative-havers left messes on their crusade to add more to the world.

I truly wish all these people luck – they are going to need to find purpose somewhere else, and quickly. If your work is best described as a JIRA jockey or even the noble maintainer and bastion against outages, there is going to be a robot that does what you can do better and faster. And look, I think there are going to be people writing super high performance C or something, but if you are writing enterprise Java or web slop or Python, I hate to be the one to tell you, but this isn't you.

One thing I’ve tried to do is warn people, in a totally inconsequential and ineffective way. I tried really hard to get two of my friends to not take their “R for statistics for social sciences” course our sophomore year because – and I’d wave my hands around in a way that probably didn’t inspire confidence as I said it – there would be a magical robot and genie that would do it for you, and don’t waste your time learning R syntax! Concurrently, in the fall of 2021, I vibe-coded for the first time (I like this phraseology because it makes it sound like I was taking a drug or something). One of the CS classes most sophomores took had a unit to learn and write PHP. I (correctly) identified that this would be a total waste of my time and something I would never have to do again, and used my early access to Github Copilot (which I think I was grandfathered into because I had GPT3 early access? although it could have been a different program, I don’t recall) to do the entire thing for me. Nowadays, most people argue that Cursor tab-complete isn’t vibe coding, and you need to take your hands off the wheel and use a TUI and not look at the code for it to count. I’d argue that since I had no clue how PHP worked, and wasn’t interested in finding out, my flow of writing a comment with high level requirements and then watching the tab complete fill out the result was essentially the same thing.

I was partnering in this class with one of my roommates and when we worked on this together I just called Copilot “the genie” and more or less refused to elaborate, beyond it being the magical creature that writes code. I still think this is the right framing in many ways; Claude and friends are these capricious spirits who ooze inhuman power, but need to be bound and instructed and cajoled the right way to get what you want done instead of, say, turning you into a piece of furniture and then disappearing into the wind.

Now, I bring this up for a few reasons. Most logistically, because there was no AI policy in the code of conduct at that point (and hey, if there was, statute of limitations by now, right?), and I enjoyed about a year or so of impunity with respect to these tools before ChatGPT’s general release ruined the fun. In my opinion I broadly did the right thing since I took on a “spirit of the game” mindset – PHP was worthless to learn, so I might as well save my time. My geology quizzes that were open internet open book could be copy pasted into the GPT 3.5 playground, since it was really just a time saver and I was taking the class for fun anyway (and unlike most of the class, I actually went to and enjoyed lecture so I figured that was the important part).

Here’s another angle: I’m part of the first cohort that actively traded off limited knowledge-acquisition and time away from the mechanics of software and towards other aspects, for example pure math – useful, kinda – and taking history classes in France and sitting in a lawn chair on the quad reading in the sun – less “useful” but if technologists are going to end up with all this money and power wouldn’t you rather them be a little more informed about the world? It’s worth extrapolating what having less singular-minded gatekeepers of this type of labor and product means. Regardless, the current model of a CS degree as a trades-style preparatory program is misguided. I tried to minimize “applied” or “practical” courses that I saw as transient or already out of date.

Another nascently pro-social impact is in what becomes economically viable. I believe that AI will not just be a force to automate existing labor, but expand the impact upwards and downwards. There’s been significant commentary on the former (and by upwards I mean multiplying leverage towards bigger projects), but I’ve seen less on the latter. It is somewhat of a double edged sword – on one hand, bottom-of-the-market knowledge work is just inherently poor quality, in the same way Amazon and Temu plastic crap is. It pollutes, not by creating floating islands of trash in the ocean in this case, but by flooding the web with slop. We arrive at the same uncomfortable consumption dichotomy we do with the plastic crap. A century ago, some given American family would own one set of bowls, one or two outfits, but of good quality that would last them years. Now, a family living paycheck to paycheck can achieve material abundance of plastic dishware and fast fashion. We tried, for a while, to frame this politically and encourage people to focus on conservation over consumption, which the average consumer totally rejected. The conscious coastal elite might thrift and purchase a wooden cutting board (but there’s like a 30% chance they did that for microplastic reasons, and it’s not like those guys are really woke about the environment), but the rest of the country likes buying things, and we’ve lost the political will to tell them to stop.

We’re already seeing this with AI. One of the most bizarre elements about spending time in Bangladesh and Dubai was seeing how prevalent AI-generated graphics were, from menus to advertising to more. Whether you use AI-generated copy or imagery is a status marker – if you can afford bespoke human creation, it shows you’re boutiquey, or organic, or so on. If you’re struggling to make payroll, saving this human labor cost is an easy choice.

I said a few paragraphs ago that there was a pro-social element to this, and even though what I’ve just described is the opposite of that, I believe there is a positive shift on the net. The first thing to internalize is that slop isn’t solely AI generated. The trajectory of the world would have led companies to contracting copy and graphics out to the lowest bidder in the global economy, which wouldn’t be much better than current models, and likely worse than future models, despite all the whining about AI slop today. AI raises the floor and lowers the cost.

What this does do is open up the bottom of the market, particularly for unsaturated fields like software, not just in “personal software,” as is much discussed. Projects that would have required months of dev work by full teams, targeting market segments that frankly weren’t profitable – not “venture scale,” goes the popular euphemism, i.e. not worth solving problems for because they can’t break out the piggy bank – are suddenly positive EV. Now, the same tools could let the most talented teams go after even bigger targets (the upwards expansion), but this is potentially a place the lower echelons of the software labor market, perhaps now laid off from FAANG in downsizing, could go. Or, just simply not economically prohibitive for the well-meaning.

Consumers (and businesses) have a very simple reaction to the price of goods. If something is priced crazy high, they try to do it themselves. If it’s priced crazy low, they’ll opt out of the hassle and make the purchase. AI changes what falls into these categories. Contra the oft-parroted idea that AI upvalues trades, if I can figure out how to fix my sink with Claude I’ll forgo hiring a plumber (which now seems overpriced). Alternatively, as the price of the same software suite craters, companies will rip out their ERPs and such to replace with Claude in a weekend, or new software firms will offer that same ERP for a fraction of the price.

Certain consumers are elbowed out of the market entirely under the current state of things, and this is what I mean by bottom-of-market expansion.

On AI Diffusion

The Twittersphere has not been able to shut up about Claude Code for going on two months at this point. Claude Cowork seems to be the most viral lab launch since ChatGPT. I think you can attribute some of this to Nikita screwing up the algorithm and incentives and blah blah blah, but concurrently we have economists and historians and mathematicians using these tools to get work done, not just software engineers.

At the same point, the folks over in the Bluesky Exclusion Zone seem to be malding26 for a salary and are convinced that LLMs are hallucination machines or stochastic parrots or can’t reason or so on and so forth, and are able to smugly point to studies that are somehow using Claude 1 Haiku, GPT Babbage and Galactica as the benchmark. It’s honestly fascinating. I read a Substack post the other day that decided to take a hardline stance that “using AI ethically” was impossible not only because of *waves hand* datacenters and the environment, but because “intelligence” as a normative term is rooted in eugenics and thus is problematic.

I understand why creatives, artists, writers, etc, harbor such hatred for AI. At the root of it, AI just isn’t a very good writer or artist. The slop arguments are real – I also get mad when I read an article that’s obviously AI, or see AI generated marketing or a menu or so on. It makes you recognize 1) the laziness and lack of care most people have, 2) the contempt businesses have for their consumers, and 3) how hilariously low the bar is for anything of quality. More troubling, though, is that the average consumer doesn’t care and is willing to elbow people out of the way to make space at the trough. I’m not sure if these types have expressed this in those words directly, or are even directly conscious of this, but I think that this is the fundamental cognitive dissonance driving the emotional response that (to continue the now tenuous farm animal metaphor) functions as horse blinders to the movement of technology. I was being a bit snide earlier about the outdated arguments people make, but it’s obvious that there was a point in time when people read up about things, found the evidence that supported the conclusions they wanted to draw, and then totally distanced themselves from the fastest moving field of all time. I still see people talk about AI generated hands and it feels like talking with someone with dementia that still thinks the USSR is around.

I generally view cognitive dissonance to be the primary explanatory factor for most human reactions, and as such something that needs to be spotted and managed. AI is drawing attention to how boatloads of human-generated writing and art are also slop. This dawning realization, combined with capability increases and general further acceptance of using AI tooling, first in engineering, then in the social sciences, then in parts of the humanities, will make these dissenters feel surrounded, betrayed, and isolated.

There will be a fundamental rupture in the conception of why certain work is valuable (is it because it is ensouled? created by a human?), although to a certain extent I find that the current critical conception of art and literary work already accounts for this, and it’s the vulgar interpretation of these that is contradicted. I’d like to write more about this in the future so I’ll be brief for now, but the traditional Marxist definition of quality of art is in relation to its politics, while the fascist definition is in relation to its aesthetics – compare the contemporary art film or piece, often critiqued as ugly or unpleasing by the public but meaningful to the academic, to the neoclassical marble statue, which the academic finds empty of meaning but the layman soyfaces at and calls beautiful. The human vs AI breakdown buckets nicely when using these definitions, even in the scenario where AI can perfectly match all the aesthetics of human creation and learns, for example, to stop using em-dashes.

The near future of AI tends to be framed as being between the two groups I’ve described above – the SF true believers and the humanist denialists – but I find this to be broadly a distraction. I’m much more concerned about how normal people, who have jobs and mortgages and frankly aren’t even all caught up to the pace of technology pre-AI, are thinking about it. There is a lot of fear here, not just from folks that work email jobs that are nascently on the chopping block, but even from those who are working mostly hands-on jobs that would need humanoid robots to replace them. I see AI researchers and policy folks wave this type of crowd off as either uneducated (not with respect to their actual level of education, but to their AI understanding) or irrelevant, which is a mistake. These are the people that will vote, demonstrate, or protest against change (maybe in truth because they don’t understand it) and can be an obstacle to both those that want to encumber or enable AI buildout. They can either shut down datacenter projects (blocking takeoff) or throw their entire weight behind chatbot bans, missing that the real usage and opportunity is from agents (gaining a moral victory but erecting no real barriers).

This is why I tend to feel that the AI policy sphere’s focus on long term safety, takeoff scenarios, x-risk, alignment, etc, is out of proportion and frankly puts the cart before the horse. Instead, there are two main areas to manage: The first is public perception and readiness, which I’ve already discussed briefly; and the second is what I’ve taken to calling close alignment27. This, in opposition to perception and readiness, relates to direct impact in the coming years to decade. It concerns me because we’ve already seen pretty damning failure on this front by the labs, who concurrently have been spending immense resources (salaries, minds, etc) on long term thought that just doesn’t exist yet.

The quintessential example is everything relating to 4o and sycophancy, and we’re all familiar with the stories of users going off their meds or divorcing their partners or killing themself / others, and while horrific I think focusing on the sensational misses the forest for the trees. There have always been truly crazy people, and while I think 4o has made it easier for these people to snap, it’s not a societal step change. On the other hand, there are an uncountable number of normal people that now have “Chat GBT” brain (somehow these people have largely coordinated to not know the name of the tool they use, despite their eagerness to tell people what Chat GBT told them about vaccines). Now a lot of this isn’t AI’s fault specifically, there are a lot of people who are going to get tricked by things they read or hear, whether it was on MSN fifteen years ago or a Tiktok “money hack” that boils down to wire fraud, but it is happening – honestly, as the models get smarter, I’d rather have an elderly relative following Claude Opus 6.5’s advice instead of “doing their own research” on Tiktok.

On the other side, we have a whole new basket of harms that our regulatory state doesn’t have antibodies for. The Grok deepfakes are particularly salient here, and even if Newsom is going to launch some sort of investigation into xAI, it should be astonishing that we even got here in the first place, between Elon giving it the green light (and then encouraging it) and the government not really having any capacity to do anything about it. I use the word “should” here, because I’m actually not very surprised at this course of events – and my point to AI policy advocates is that they should be spending a lot more of their time trying to manage and educate government officials around these types of issues instead of explaining who Yud is to them.

I honestly think this is a much more interesting line of reasoning than hand-waving about paperclipping is (which I get that intellectual types want to debate about, because it’s more stimulating than thinking about how minute mechanisms need to change today). In general, is this equivalent to the government's responsibility for the health and safety of its citizens? It doesn’t look like we can even do that, see the ACA. If we had to start a new country to follow the ideas we set out to have America espouse, how different would it look from America as it is today? Can American leadership make this pivot? How? These are questions that go beyond AI.

Some of it is the equivalent of the way we should be forcing Twitter to be regulated better. The same moral bankruptcy that drives the Nazi site is what causes Grok undressing girls to be a Nazi site paid feature. Obviously the tech itself should have limits but these are both symptoms of the larger issue, and solutions that address one but not the other are shortsighted bandaids instead of anything holistic. Some of it is the equivalent of how we want to let our citizens treat themselves. Chatbot brain is a symptom of illiteracy brain, not a cause. We get these people off of AI and they get onto Reels. So we ban reels and they get on daytime television. It should be harder for these people to get nuclear grade versions (Steve Harvey is better than the sycophancy fountain), and this is especially true for the youth (see phones in schools, etc), but there is an underlying issue. Do we treat this like, say, weed, which also falls in the boat of “idiots want to do this and make themselves stupid because it feels good?” We’ve already decided as a society that this is fine actually, mostly because we think the enforceability is a worse issue than the base issue itself. Even China, who tried to ban video games for kids, has failed miserably and all their youth are addicted to anime gambling apps.

It is in many ways just a grim reflection that most people don’t care. We are going to see a bifurcation in media the same way we have Whole Foods / McDonald’s – Amazon plastic crap for the mind. Again I’m not sure if the American educated class is ready for this, and I expect they will be pretty severely jaded as people willingly trade away from their white collar labor to slop. Soon, everything will be Glup Shitto. The positive outlook on all this is that we, societally, are realizing what we’ve done to ourselves. I truly think human slop would eventually catch up, whether it is cocomelon or Indonesians on Twitter pretending to be white women, and it is good that we are realizing it is corrosive and making our kids stupid and deciding to get around to banning phones in schools etc. Remember – never scroll, not even once.

On Datacenters

I have a lot to say here, and as such will defer it to future writing. A certain amount of what I want to say re: energy, water, etc has been covered (i.e. debunked) thoroughly by the wonderful Andy Masley. A few quick flag plants though:

1) I hope companies will try to manage public opinion in good faith. It will not be hard to sign water-use statements or renewability pledges, given these aren’t real issues, and hopefully that will take down some of the broader slopulism we’re seeing right now. However, if they need to play hardball, I think it is completely reasonable to charge municipalities different rates for AI access. If your metro area doesn’t build data centers, your businesses are going to have to pay more for Claude. These guys are going to either get really mad or go elsewhere (especially if AI is going to be as all-consuming as I and most others think), which will force cities and states to push these projects through (albeit at a delayed pace, so this is more relevant to the eventual full deployment vs the next suite of training rounds).

2) As capital substitutes for labor, it will become environmentally greener to use AI instead of paying a human. It probably is true already! This is relatively uncomfortable for the slopulists.

3) I think a lot of people, if you sat them down and patiently explained that a datacenter was really just a big computer, not a center where data lived, or a big evil AI box, or sucking up all the water permanently, or giving people “turbo cancer,” would tell you that they didn’t care and they’d like to blow it up. And then, if you patiently explained that most everything they do on their phone runs in a data center, and if you blew it up they couldn’t use Tiktok or Instagram or log onto Slack to do their job or use the 20-25-30 percent tip payment kiosk at their favorite coffee shop or listen to music on Spotify or take an Uber28, or that everything they’d want to do would 100x in price due to Upstream Factors or whatnot, they would tell you that they still didn’t care, and they would still like to blow it up, and that tech is ruining our lives anyways, even if they’d get an inkling of an idea from the last few things on that list that it in fact isn’t. And then you’d say okay, fine, I’ll blow it up, and we’d go and blow them up together in the joy only destruction can bring, and they’d go back to their daily life and realize how impossibly different it is and that they did in fact want all those things and now they were miserable, and didn’t actually mean it when they said they wanted to blow the data centers up. You could try to explain to them at this point the concept of a revealed preference but frankly it would be a little too late for this to matter.

On Waymos

I love them!

Seriously. I do. I was just at CES and got so into telling the group I was with about how they worked, where they operated, the different types of cars, etc, that a guy came up to us and started asking me questions, which I fielded, until he asked me what it was like to be chosen to staff the booth. I had to sadly inform him I wasn’t official booth staff because I didn’t, in fact, work there.29

Beyond the actual surface level experience, they are a powerful example for techno-positivism, an invention that I view as almost unambiguously good, a solution to a problem we’ve created (texting and driving at a small scale, car-centric urbanism at a large one) – but most importantly, legible to normal people as an improvement in the physical world. As such I think it’s important to understand how this fits into a model of progressivism for the latter half of the decade. I’ve spent some time playfully debating Waymos30 with friends who I think are at risk of falling into the slopulism trap. Moving to stop Waymo proliferation just seems like a massive own goal on so many of what I consider to be tenets of the progressive cause. My goal here is not to write up a factual takedown (although many of the criticisms are completely defeated here, for example emissions concerns re: computation, traffic/congestion in cities, etc), but to just sketch out some of my thoughts.

Waymos and Urbanism: The current model in SF, and other similar cities, has been to focus our energy into dumping inordinate amounts of money into projects and then have them be utterly mismanaged. Criticism of this model of management has been leveled more broadly against the Biden administration, and fairly so. A majority of the commitments I and others were excited about were easily reversed by Trump, because either money was allocated without any follow-through on accomplishing work or the work being done was so disorganized that it crumbled under any pressure, or else they became something Trump got to claim credit for. This isn’t any new analysis; progressives have started debating this inside and out, to the point that random boomers are sending Ezra Klein death threats.

I do see one failure mode that manifests both in general and with respect to Waymos – there is a general unwillingness to allow any form of private sector involvement, equating any criticism of the million-dollar toilet or unsafe transit experiences, and any suggestion that the situation could be improved, with, say, defunding the Post Office. This is a category error in my mind. The Post Office privatization ghouls want to destroy one thing, that we run at a loss for societal good, to serve people that wouldn’t be served otherwise, at the altar of profit. The key difference is the Post Office already exists, and runs fairly well! This can’t be said of many of our urban staples. Waymo doesn’t endanger public transit. They will end up paying taxes that support public transit. It’s not like the city is trying its hardest to support public transit itself! I think over half of the people on my bus route (who are rich white yuppies) aren’t paying the fare. There is sparse enforcement, but not enough to make these people care.

The above illustrates that there is just a lack of political will and state capacity to improve the current situation. Some of the safety benefits of Waymos could be accomplished with speed limiters – too bad we will never ever be able to put these in place! On the other hand, you can take a perfectly safe Waymo ride today. I think these types of arguments also make two main analytical errors. First, from a perspective of substituting individual drivers, human drivers will be less safe, even when restricted, assisted, whatever it may be. Even if you accept what I think is a poor argument that the comparative statistics are measuring against the wrong population of drivers31, Waymos are superhuman defensive drivers. They will swerve if a kid falls off his skateboard into the road. They will swerve if an oncoming drunk driver runs a red light. They have the advantage of having an inhuman reaction time and calmness under pressure. The second error is regarding the externalities of Waymo as a replacement for Uber. Removing a random human from the situation simply makes this kind of thing much safer. There are over 2,000 reported sexual assaults tied to Ubers/Lyfts every year. It doesn’t help that a large portion of the ride app demand is when people are at their most vulnerable – inebriated or trying to unwind, not being on alert to defend themselves or thinking about tricks such as getting dropped off blocks away from their home to conceal their address. I think we will look back at resistance to Waymo as cruel – a choice that puts maintaining the status quo in front of the lives or wellbeing of fellow citizens.

I lived in Paris during the spring semester of my junior year. I rode in a car maybe twice – I walked or took the metro most places (my main line was fully automated by the way!) and didn’t want to take an Uber. But both of those times my drivers almost got in crashes because they weren’t following basic traffic laws. As much as Hidalgo has been successful removing cars from certain roads (which I wish American cities were able to do), there will always be a non-trivial amount of auto demand by residents and tourists. If you’ve walked down Parisian streets, you know how crowded they become when cars are parked, or how jammed parts of town become at peak hours. AVs would reduce the requirement for locals to own cars, and figure out where to park them. For the tourist angle, we’d rather have fewer AVs more efficiently service the rideshare demand instead of a glut of Uber drivers circling the periphery like hawks.

On congestion: A common smarter argument is to worry about the world where Waymo share increases. This argument relies on the idea that this increase in Waymos will result in an absolute increase in cars in the city, and that commuters aren’t offset, or not in a net-positive way. First off, I don’t know if these are reasonable expectations, but for the sake of argument let's accept these so I can push back along other lines. The biggest failure of this position now is assuming that Waymos are going to contribute to congestion equally to regular drivers. I don’t think this holds – Waymo can coordinate their fleet to a degree that is completely impossible for individual drivers, or even the city, to accomplish. They also have dedicated teams working on congestion already, with respect to how the caution of the vehicles results in interactions with others using the road, be it drivers or buses or bikers or cable cars. At the end of the day, I think congestion is an engineering problem, and as such, it is solvable by a strong engineering team.

At the same point where a Waymo percentage would become non-trivial enough to cause meaningful congestion, you also now have a significant portion of auto traffic that doesn’t need central parking. This is an incredibly exciting prospect, and a nominal goal of progressive urbanists, that I think is only practically accessible through this route. Again, to return to the Paris example, or to look at Shanghai or other Chinese cities: these places have many more political levers to pull toward this goal, and pull them they have, yet they haven’t gotten close to achieving it. I don’t want to pretend that every individual will just trade off to Waymos, or that we want them to do this instead of taking transit, but to be perfectly realistic, Google will be able to scale the Waymo fleet more quickly and more cheaply than SF or other cities will be able to build transit. My hometown of Minneapolis’ light rail project is going to finish (at least) 8 years over schedule and two billion dollars over budget. Of course, transit needs to start somewhere, but a similar investment in Waymos would make a larger dent on the percent of the population that owns a car.

Somewhat uncomfortably, there are practical realities here too. There is a class of Americans that are much less likely to take transit and can already afford their BMWs. This is less true in places like NYC or Paris, which have secure transit systems, but the reality on the ground in SF is that there is a significant sector of people that wouldn’t trade off car ownership for transit (even if improved at the pace we expect within the decade at the most optimistic rate), but would for bountiful Waymo access. This is even more visible across most suburbs.

Waymos and Labor:

Labor is another axis along which the Waymo debate and the national debate coincide. Broadly, there is a wing of progressive policy that feels the need to support to the death certain labor groups that have had historical power. Big picture I think this is anti-progressive in itself – endless support of dock workers or train conductors works against the health, quality of life, cost of living, and wellbeing of the average American. On net, these jobs are not worth the tradeoffs32.

On the Waymo side of things, this manifests in a bizarre defense of Uber. For years, progressives have correctly identified Uber as a morally corrupt company that mistreats its workers and pushes local and state governments around with its weight, wealth, and lobbyists. At some point though, exploitative gig-work has become a backbone of American labor and must be defended. I don’t understand this. As a progressive, I’m mortified that some of my ideological companions are so insistent on backing gig-work-share-cropping! We do not need to stand for something just because it has existed for under a decade; the sanctity of labor doesn’t need to apply to everything. We need to make smart decisions, not reflexive ones.

I do think this is a spillover of boomer pattern matching, which is broadly a huge issue with the political environment as it stands. To put it as frankly as possible, a large part of our government and political actors' brains have crystallized, which isn’t their fault, it’s just what happens to their brain chemistry with age. They are unable to process new things (well, lots of them, there are of course exceptions including some of the lovely and intelligent boomers I spend time talking with). Instead, they rely heavily on pattern matching to their youth, and see the old struggles of labor, environment, etc, in everything. This is why you see people with “No Nukes!” signs at data center protests. It’s sad. These elderly folks want to feel important, but don’t understand that the underlying terrain has shifted, more than they can comprehend, with the technological, political, and societal change they themselves spurred. I truly view this with pity. The defeat of intellect by age is one of the great tragedies, and seeing it front and center in our country is demoralizing. It is bipartisan, it is everywhere when you start looking for it. I just read an anecdote in Dan Wang's book that China has these elderly folks that still want to be politically involved run local propaganda operations, which I found to be a very intelligent way of channeling their energy but keeping them out of the way of real decision making.

As a sidebar, the boomer-controlled state discourages the youth. The obvious mechanism is direct, by blocking change through their seats in government or by flooding the zone at local hearings, scheduled conveniently at 2pm on a Tuesday when the retiree class are the only ones not busy with having a real job33. There is a subtle second mechanism – I think that there is a large cohort of people my age that grew up in the stagnant boomer world and basically have seen minimal change instituted or even tried since their childhood, and then haven’t studied enough history (or haven’t internalized it) to realize how utterly bizarre this is in the context of America. Our country’s past consists of moments of radical change strung along into the story that we know, with new forms of governance and new institutions birthed in reaction to technological or political or social change, without fear of destroying flawed parts of the foundation and rebuilding on top of them.

The current stagnation is due in part to how fast the current terrain has shifted under our feet (and again due to boomer unwillingness or unknowingness). With technology alone, there has been less time to get on top of and understand social media than say, the telephone. I don’t think this should stop us, and in fact it should push us to pursue even more radical change instead of relying on our sclerotic structures to handle it for us. Most people my age remember the embarrassing congressional grilling of Zuck (“...we run ads senator”) in 2018, and while I’m not saying we should have had me and my other sixteen-year-old classmates be in charge of Senate hearings, I do want to suggest that we might have done a better job.

Waymos and Policy:

Cities are our societal breeding ground for development of technology and consensus. I think that on the net, the most lives saved from, say, drunk driving accident prevention, will happen in the suburbs. We cannot just start there, however; Waymos are going to have to gain success and consensus from urban deployments before we roll them out across society.

A common anti-Waymo (specifically Waymo) argument is that they cost too much and will never be profitable. First, this discounts the ability to produce the next generations of vehicles at a fraction of the current cost. The Jaguars cost an inordinate amount of money because they were the only option in ~2017 or whenever they did the original order for an EV. As Waymo shifts to Hyundai, who is bringing their operating costs down through dramatic automation, and can install the sensors pre-fab, we are going to see this price crater. Regardless – this is still a very engineer-brained take that misunderstands how society works. We are willing to run pro-social services at a loss. We will go out of our way to achieve things like this. It is our government’s mandate to provide for the health and wellbeing of its citizens, and viewed from this angle, it is relatively bizarre that we have the death machines all over our cities.

In my view, once the technical novelty is proven out a bit, we are going to see sweeping public and policy support for Waymos. People are going to realize that we are not going back to the pre-smartphone world where people aren’t playing Candy Crush at the red light or on the HIGHWAY. Look around next time you’re driving – this is the game I play with people on the fence about Waymo. Once you consciously realize how many people are driving distracted you get really antsy about the whole thing.

All in all, I’m not very concerned about policy obstacles to Waymos, just because I think they will be incredibly popular along the axes you need to get local voters convinced. A foolproof path for this kind of thing is to leverage suburban moms, the elderly, and the disabled. I say this somewhat cynically, but I also believe Waymos are genuinely a marked improvement for all of these groups.

Moms are incredibly paranoid about the safety of their kids – we’ve seen this with the absurd backlash and panic about banning phones in schools (how will I contact my kid??), historical grassroots activism around drunk driving, and so on. It is super easy to sell to parents that there is a trackable, provably safer mode of transit, that is also popular with the kids themselves because they get the autonomy that not having a parent actually in the car would provide.